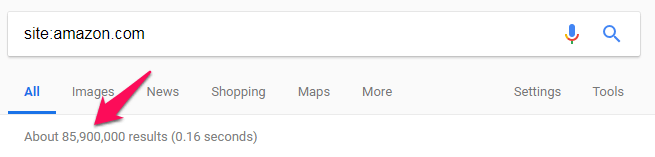

More than any other kind of site, e-commerce websites are notorious for developing URL structures that create crawling and indexing issues with the search engines. It’s essential to keep this under control in order to avoid duplicate content, and to check how many of your pages Google reports as indexed. You can do this by running a “site:example.com” search on Google to see roughly how many of your pages Google knows about across the web.
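To have a baseline to compare the site: count against, you can count the URLs your own XML sitemap lists. The Python sketch below assumes a single sitemap in the sitemaps.org `<urlset>` format (a sitemap index file would need one extra level of fetching) and uses only the standard library; the function names are illustrative, not part of any SEO tool.

```python
import xml.etree.ElementTree as ET

# XML namespace used by sitemaps that follow the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text: str) -> list[str]:
    """Return every <loc> value from a <urlset> sitemap, in document order."""
    root = ET.fromstring(xml_text)
    return [
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text and loc.text.strip()
    ]


def sitemap_summary(xml_text: str) -> dict[str, int]:
    """Count total and unique URLs; a gap between the two means the sitemap repeats URLs."""
    urls = sitemap_urls(xml_text)
    return {"total": len(urls), "unique": len(set(urls))}


if __name__ == "__main__":
    sample = """<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/shoes</loc></url>
  <url><loc>https://example.com/shoes</loc></url>
</urlset>"""
    print(sitemap_summary(sample))  # {'total': 3, 'unique': 2}
```

Compare the `unique` figure, not `total`, with the site: result, since repeated entries in the sitemap inflate the raw count.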


While Google webmaster trends analyst Gary Illyes has said this number is only an estimate, it is the easiest way to tell whether something is seriously off with your site’s indexing. Regarding the number of pages in its index, Bing’s Stefan Weitz has also admitted that Bing “… guesstimates the number, which is usually incorrect … I think Google has had it for so long that people expect to see it up there.”

The page counts from your content management system (CMS) and e-commerce platform, sitemap, and server files should match almost perfectly, or at least with any discrepancies addressed and explained. Those numbers, in turn, should roughly match what returns in a Google site: operator search. Smart on-site SEO helps here; a site developed with SEO in mind helps considerably by avoiding duplicate content and [structural issues](https://searchengineland.com/google-makes-some-clarifications-related-to-mobile-first-indexing-300199) that can create indexing problems.

While too few results in an index can be a problem, too many results are also a problem, since this can mean you have duplicate content in the search results. While Illyes has confirmed that there is no “duplicate content penalty,” duplicate content still hurts your crawl budget and can also dilute the authority of your pages across the duplicates.

If Google returns too few results:

- Search Google for a representative sample of your pages to identify which are actually missing from the index. (You don’t have to do this for every single page.)
- Identify patterns in the pages that are not

essential to strong indexation and have been covered in depth elsewhere, but I would be remiss if I did not discuss them here.

I cannot stress enough how important a comprehensive sitemap is. In fact, we seem to have reached the point where it is even more important than your internal links. Gary Illyes recently confirmed that even search results for “head” keywords (as opposed to long-tail keywords) can include pages with no inbound links, not even internal links. The only way Google could have known about these pages is through the sitemap. It is important to note that Google’s and Bing’s guidelines still say pages should be reachable from at least one link, and sitemaps by no means diminish the importance of this.

It’s equally important to make sure your robots.txt file is functional, isn’t blocking Google from any parts of your site you want indexed, and declares the location of your sitemap(s). Functional robots.txt files are especially important because, according to Illyes, if they are down, Google may stop crawling your site altogether.

> DYK that if Googlebot cannot access the robots.txt file due to a server error, it’ll stop crawling the website entirely?
>
> Gary “鯨理” Illyes (@methode), [February 16, 2017](https://twitter.com/methode/status/832116735750897664)

An intuitive and logical navigational link structure is a must for good indexation. Apart from the fact that every page you want indexed must be reachable from at least one link on your site, good UX practices are essential. Categorization is central to this. For example, research by George Miller, cited by the Interaction Design Foundation, suggests the

human mind calculation. Bing recommends the following:

- Keyword-rich URLs that avoid session variables and docIDs.
- A highly functional site structure that encourages internal linking.
- An organized content hierarchy.

3. Get a handle on URL parameters

URL parameters are a common cause of “infinite spaces” and duplicate content, which severely limits crawl budget and can dilute signals. They are variables added to your URL structure that carry server instructions used to do things like:

- Sort items.
- Store user session information.
- Filter items.
- Customize page appearance.
- Return on-site search results.
- Track ad campaigns or signal information to Google Analytics.
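Before deciding how to handle each parameter, it helps to know which ones actually appear on your site and how many duplicate URLs they create. The Python sketch below tallies parameter names across a list of crawled URLs (for example, an export from a crawler) and strips an assumed set of non-content parameters to approximate each page’s canonical URL. The `NON_CONTENT_PARAMS` names and both helper functions are hypothetical illustrations, not a Google or Screaming Frog API.

```python
from collections import Counter
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameter names that usually do not change page content
# (campaign tracking, session IDs, sort order). Replace with names from your own crawl.
NON_CONTENT_PARAMS = frozenset({"utm_source", "utm_medium", "utm_campaign", "sessionid", "sort"})


def parameter_counts(urls: list[str]) -> Counter:
    """Count how many URLs carry each query-parameter name (once per URL)."""
    counts: Counter = Counter()
    for url in urls:
        # keep_blank_values=True so "?sort=" still registers the "sort" parameter.
        pairs = parse_qsl(urlsplit(url).query, keep_blank_values=True)
        counts.update({name for name, _ in pairs})
    return counts


def strip_params(url: str, ignored: frozenset = NON_CONTENT_PARAMS) -> str:
    """Drop ignored parameters (and any #fragment) to approximate the canonical URL."""
    parts = urlsplit(url)
    kept = [(k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True) if k not in ignored]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


if __name__ == "__main__":
    crawled = [
        "https://example.com/shoes?sort=price",
        "https://example.com/shoes?utm_source=newsletter",
        "https://example.com/shoes",
        "https://example.com/shoes?color=red&sort=price",
    ]
    print(parameter_counts(crawled).most_common())
    # Four crawled URLs collapse to two distinct pages once non-content parameters are removed.
    print(len({strip_params(u) for u in crawled}))  # 2
```

If the number of distinct stripped URLs is much smaller than the number of crawled URLs, those parameters are generating duplicates that are candidates for canonicalization or noindex. This only approximates canonicalization; whether a parameter really changes the content is a judgment you make per parameter.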

If you use Screaming Frog, you can identify URL parameters in the URI tab by selecting “Parameters” from the “Filter” drop-down menu.

Examine the different types of URL parameters at play. Any URL parameters that don’t significantly affect the content, such as ad campaign tags, sorting, filtering, and personalizing, should be handled using a noindex directive or canonicalization (and never both). More on this later.

Bing also offers a convenient tool to ignore select URL parameters within the Configure My Site section of Bing Webmaster Tools.

If the parameters significantly affect the content in a way that creates pages which are not duplicates, here are some of Google’s recommendations on proper implementation:

User-generated values that do not significantly affect the content should be placed in a … the page indexed, in which case noindex should be used.

Do not use noindex and canonicalization at the same time. John Mueller has warned against this because it could potentially tell the search engines to noindex the canonical page as well as the duplicates, although he said that Google would most likely treat the canonical tag as a mistake.

Here are things that should be canonicalized:

Here are things that I recommend be noindexed:

- Any membership areas or staff login pages.
- Any shopping cart and thank-you pages.
- Internal search result pages. Illyes has said, “Generally, they are not that useful for users and we do have some algorithms which aim to eliminate them …”

Opinions expressed in this article are those of the guest author and not necessarily Search Engine Land.
